Introducing Syndica Cloud: Our V2 RPC Implementation

This post is Part 1 of our series introducing Syndica Cloud, our advanced web3-native cloud stack on Solana. It highlights the V2 implementation of key components of our RPC infrastructure. Stay tuned for upcoming posts exploring other new features like Advanced Rate Limiting, ChainStream API, App Deployments, Dynamic Indexing, and more.

Our RPC V2 Reimplementation

Building on our experience with Solana RPC since 2021, we’ve re-engineered core components of our infrastructure to offer a best-in-class developer experience. Here, we highlight these advancements in our core infrastructure, Edge Gateway, metrics, and analytics.

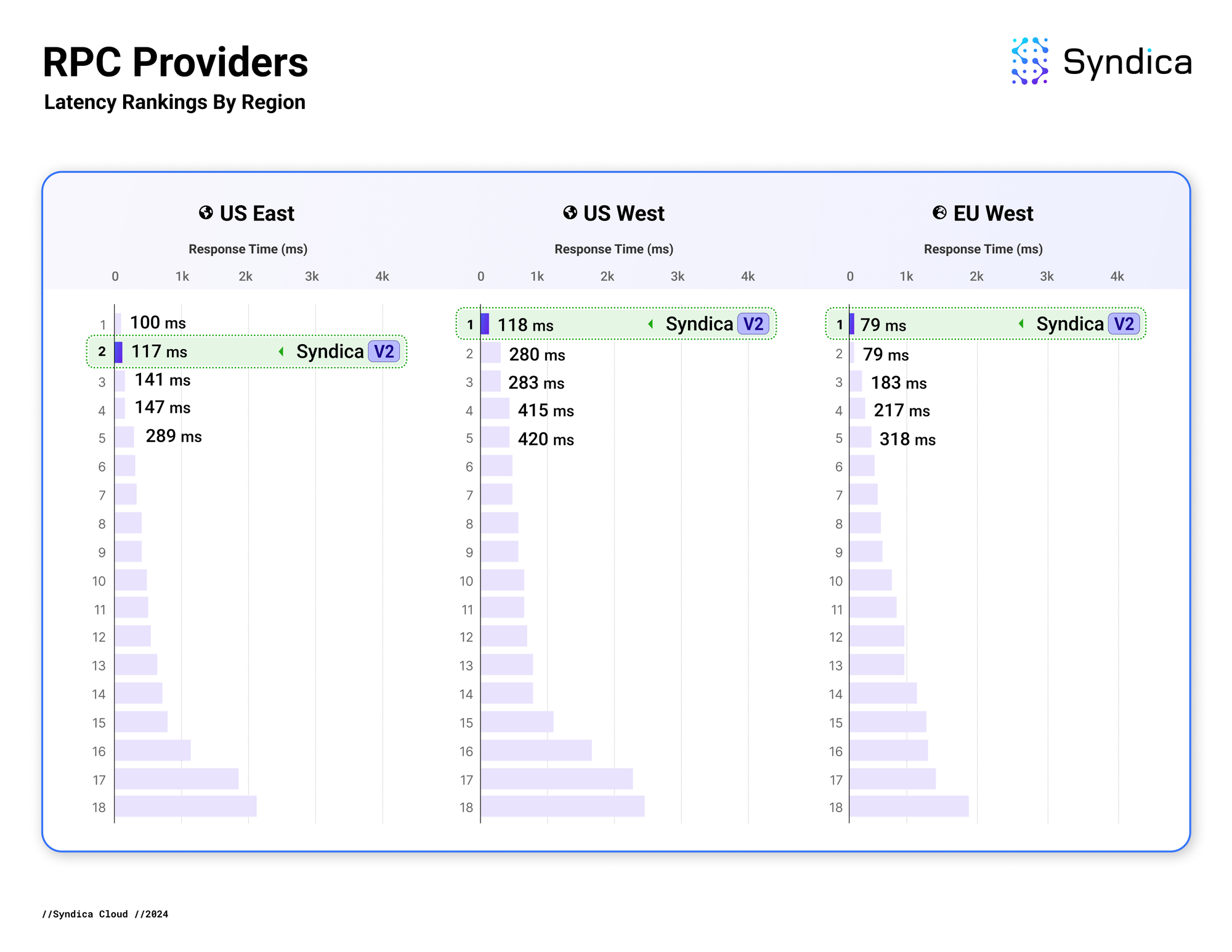

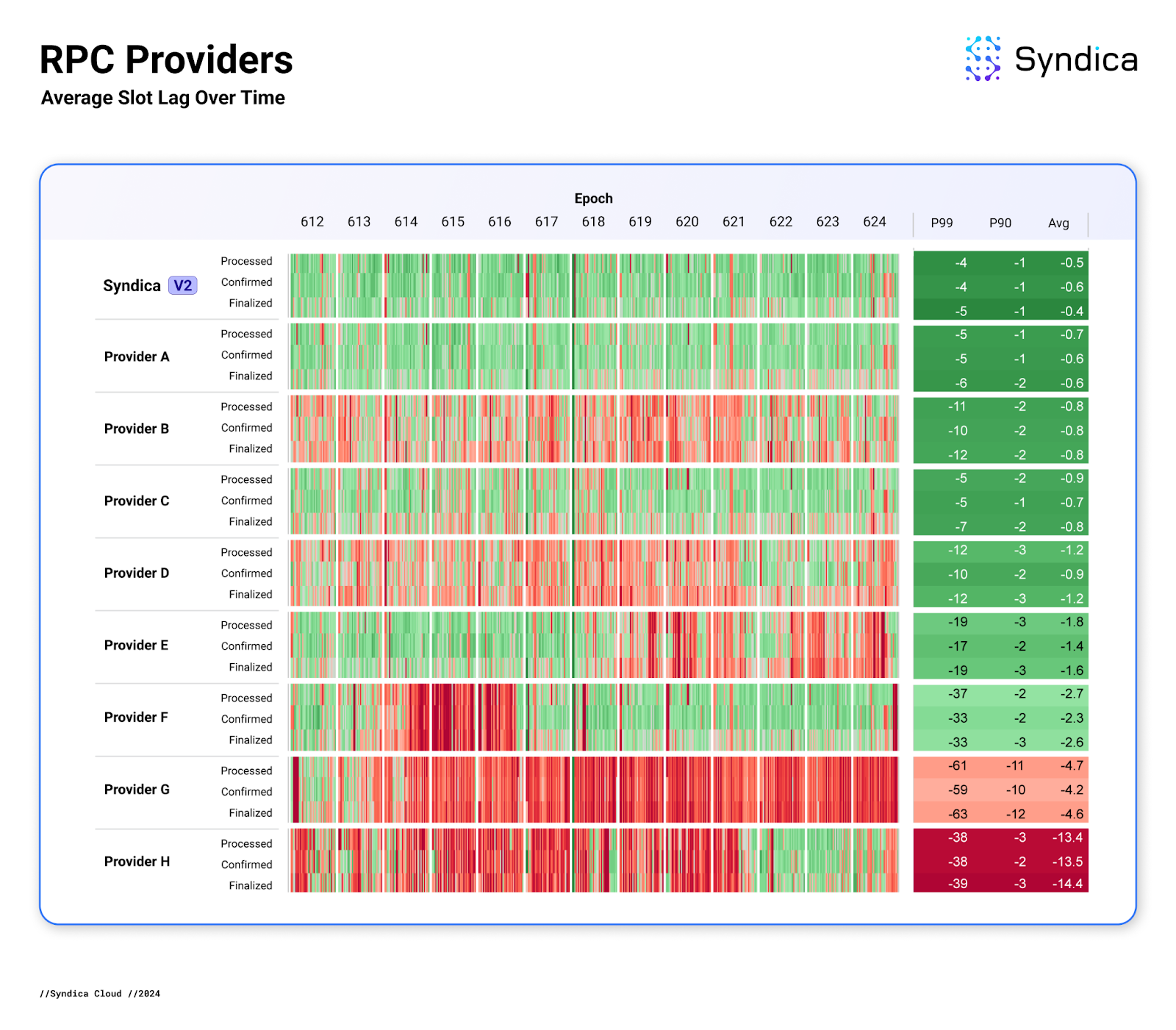

Multi-Region

In Syndica Cloud, we’ve implemented multi-region support with automatic geo-proximity routing. Our initial rollout covers us-east (Virginia), us-west (Oregon), and eu-west (London). Requests to api.syndica.io are now automatically directed to the nearest region for the lowest possible latency. We compared RPC latency across the top Solana RPC providers to verify that our V2 is best-in-class.
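Geo-proximity routing is normally handled by DNS or a global load balancer before a request reaches any server, and this post does not say which mechanism Syndica uses. The Python sketch below only illustrates the underlying decision: given a client's approximate location, pick the closest of the three launch regions by great-circle distance. The coordinates and function names are assumptions for illustration, and real deployments often route on measured latency rather than raw distance.

```python
import math

# Approximate metro-area coordinates (lat, lon) for each region.
# ASSUMPTION: illustrative values, not Syndica's actual data-center locations.
REGIONS = {
    "us-east": (38.9, -77.5),   # Virginia
    "us-west": (45.6, -121.2),  # Oregon
    "eu-west": (51.5, -0.1),    # London
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(client: tuple[float, float]) -> str:
    """Name of the region geographically closest to the client."""
    return min(REGIONS, key=lambda name: haversine_km(client, REGIONS[name]))
```

For example, `nearest_region((40.7, -74.0))`, a client in New York, returns `"us-east"`, while a client in Paris lands on `"eu-west"`.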

Custom-Built Node Hardware

RPC Node Configuration

We optimized our node configurations alongside new hardware to maximize performance and reliability. Drawing on our experience operating RPC nodes, we've contributed upstream to the validator repository, including a significant memory usage improvement that was merged in this PR: https://github.com/anza-xyz/agave/pull/1137 (original PR with technical details here: https://github.com/solana-labs/solana/pull/34955).

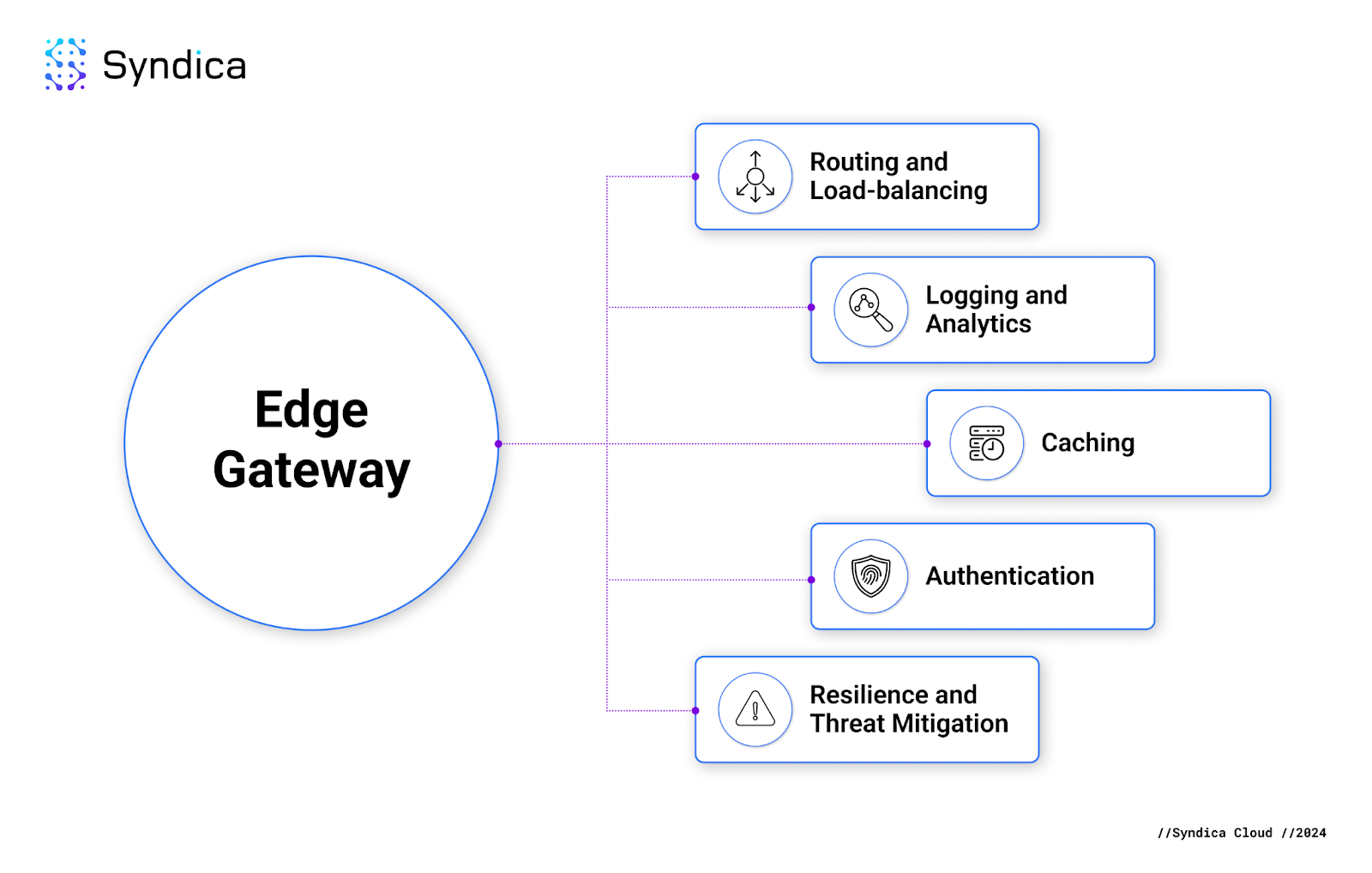

Edge Gateway: Our new custom-built API gateway

Edge Gateway is our API routing service for RPC requests, ensuring fast and reliable performance. It balances requests across healthy RPC nodes and maintains uptime, even during cloud or hardware outages, making it the backbone of our RPC infrastructure.
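A minimal sketch of the balancing behavior described above, assuming a simple round-robin policy that skips any node a health checker has marked unhealthy. The class and method names are hypothetical; this post does not specify Edge Gateway's actual balancing strategy.

```python
import itertools


class HealthyRoundRobin:
    """Rotate requests across RPC nodes, skipping nodes currently marked unhealthy."""

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = list(nodes)
        self.healthy = set(nodes)
        self._cursor = itertools.cycle(range(len(self.nodes)))

    def mark(self, node: str, healthy: bool) -> None:
        # A separate health checker would call this after probing each node.
        if healthy:
            self.healthy.add(node)
        else:
            self.healthy.discard(node)

    def next_node(self) -> str:
        # Try at most one full rotation before giving up.
        for _ in range(len(self.nodes)):
            node = self.nodes[next(self._cursor)]
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy RPC nodes available")
```

Marking a node unhealthy simply removes it from the rotation until the health checker marks it healthy again, so traffic keeps flowing to the remaining nodes during a partial outage.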

Reimplementation in Rust

Our original API routing service was written in Go, which had its advantages, but to truly maximize performance and minimize maintenance overhead, we reimplemented it in Rust. Rust gives us finer control over memory allocation and error handling, catches more issues at compile time, and lets us deliver consistently high performance.

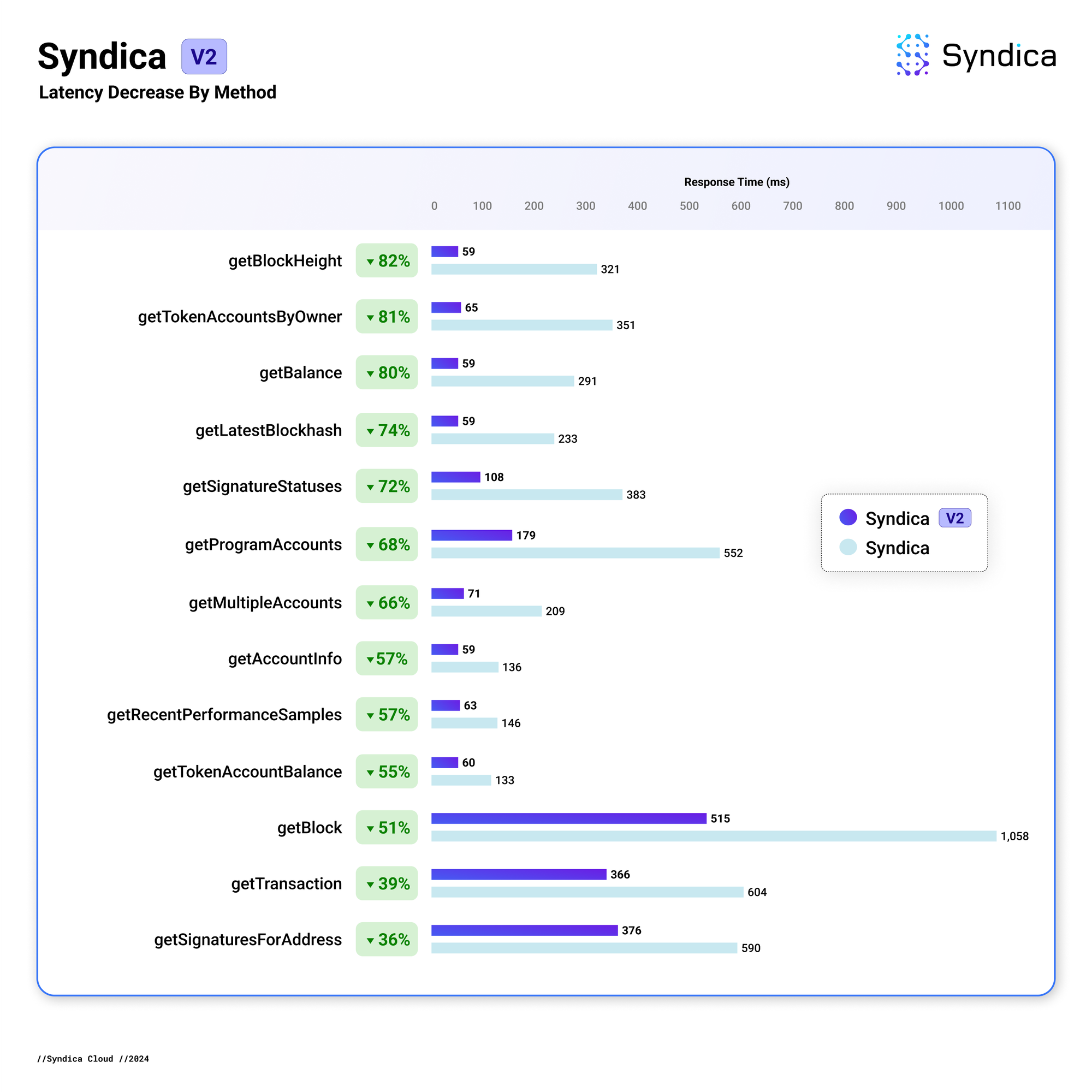

We tested our V2 implementation on different RPC methods with a variety of parameters and found it responded about 2.5x faster on average:

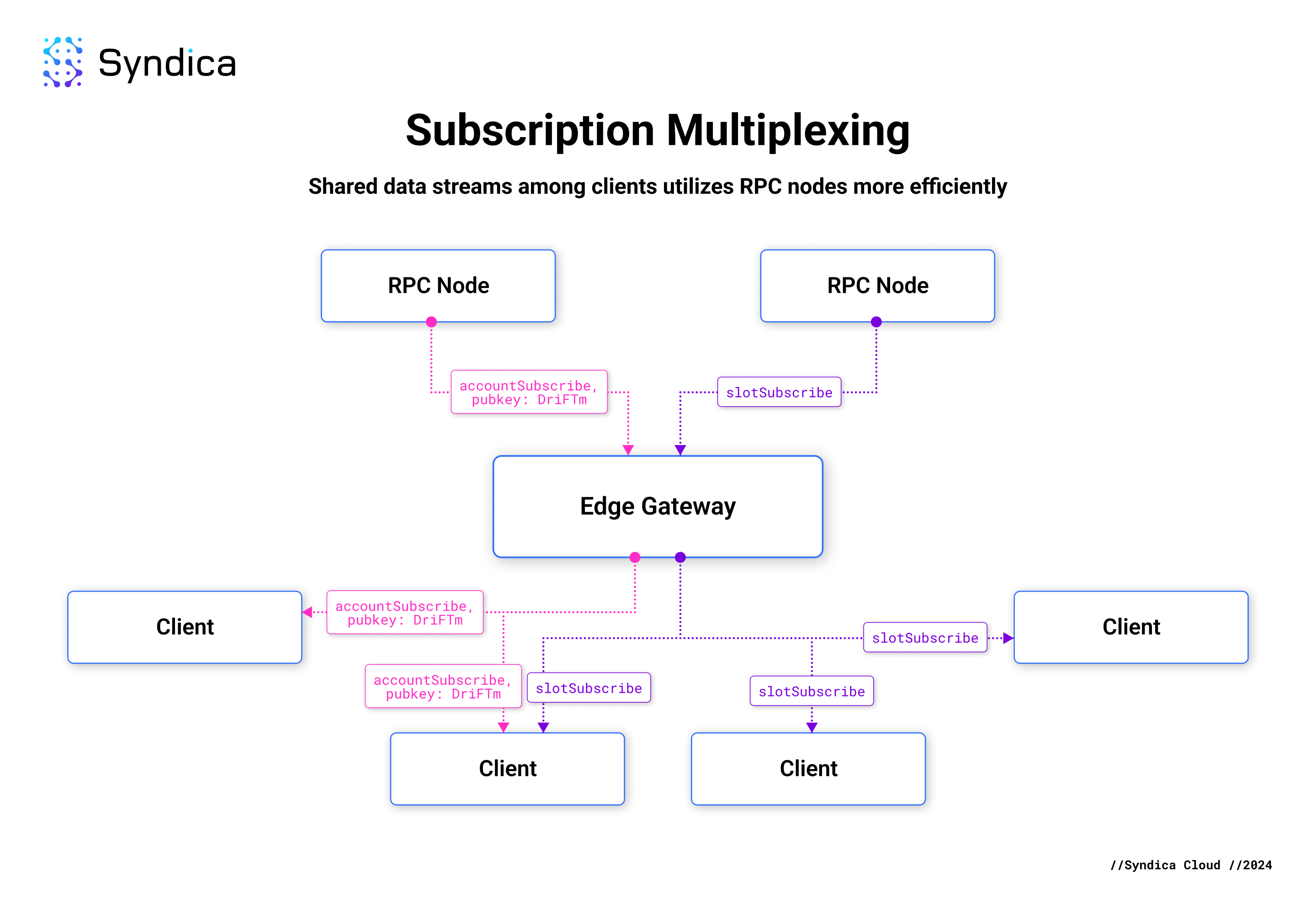

WebSocket Subscription Multiplexing

Subscription multiplexing is the reuse of a single existing subscription on an RPC node to stream data to multiple identical client subscriptions. We implemented opportunistic subscription multiplexing to reduce subscription creation latency and related costs.

When a new subscription is requested, we quickly check whether an identical subscription already exists with an upstream RPC node. If one does, we reuse it without sending any new request upstream, which lowers the load on the RPC nodes and lets them serve more requests at lower latency.

Multiplexing is applied opportunistically, meaning it occurs whenever a matching existing subscription is available. If no match is found, new subscriptions are created immediately without delay. In the worst-case scenario, the connection behaves like a standard, non-multiplexed subscription with no latency improvement. In the best-case scenario, multiplexing reduces latency by eliminating redundant requests.

This diagram shows an example in which a single upstream slotSubscribe subscription on an RPC node streams data to three identical client slotSubscribe subscriptions, and a single upstream accountSubscribe subscription for Pubkey DriFTm3wM9ugxhCA1K3wVQMSdC4Dv4LNmyZMmZiuHRpp streams data to two identical client accountSubscribe subscriptions.
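The opportunistic multiplexing described above can be sketched as a table keyed by the subscription's method and parameters: a match reuses the existing upstream stream, and a miss opens a new one immediately. This is a minimal illustration under our own assumptions (the class name, the JSON-based key, and the `upstream_opened` counter are hypothetical), not Edge Gateway's code.

```python
import json


class SubscriptionMultiplexer:
    """Share one upstream subscription among identical client subscriptions."""

    def __init__(self) -> None:
        # Canonical subscription key -> ids of clients attached to it.
        self.clients: dict[str, set[str]] = {}
        self.upstream_opened = 0  # upstream subscribe requests actually sent

    @staticmethod
    def key(method: str, params: list) -> str:
        # "Identical" = same method and same params; sort_keys makes config
        # objects such as {"encoding": ..., "commitment": ...} order-insensitive.
        return json.dumps([method, params], sort_keys=True)

    def subscribe(self, client_id: str, method: str, params: list) -> bool:
        """Attach a client. Returns True if an existing upstream subscription was reused."""
        k = self.key(method, params)
        reused = k in self.clients
        if not reused:
            # No match: open a new upstream subscription right away, no waiting.
            self.upstream_opened += 1
            self.clients[k] = set()
        self.clients[k].add(client_id)
        return reused

    def unsubscribe(self, client_id: str, method: str, params: list) -> bool:
        """Detach a client. Returns True when the upstream subscription can be closed."""
        k = self.key(method, params)
        if k not in self.clients:
            return False
        self.clients[k].discard(client_id)
        if not self.clients[k]:
            del self.clients[k]
            return True
        return False

    def fan_out(self, method: str, params: list, notification: dict) -> dict[str, dict]:
        """Deliver one upstream notification to every attached client."""
        return {cid: notification for cid in self.clients.get(self.key(method, params), set())}
```

Replaying the diagram's scenario (three clients calling slotSubscribe, then two calling accountSubscribe for the same Pubkey) leaves `upstream_opened == 2`: five client subscriptions backed by two upstream ones. A real gateway would also need to rewrite the subscription id inside each notification to the id it handed that particular client, since Solana WebSocket notifications carry the subscription id in their params.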

Requesting Node Affinity: Specify sending all RPC requests to single/multiple nodes or a dynamic strategy

Edge Gateway functions as a reverse proxy and load balancer for RPC nodes, ensuring that sequential requests from the same client to api.syndica.io are distributed across multiple RPC nodes for optimal performance and reliability.

Typically, this load-balancing behavior distributes requests evenly across nodes, minimizing latencies. However, there are times when directing sequential RPC requests to the same node is beneficial for a "sequentially consistent" view of the network. Because each RPC node receives and processes new data with slightly different delays, different nodes may not always agree on the latest state. Directing sequential requests to a single node mitigates this issue, providing a consistent view of the network's state.

For instance, with the getSlot RPC method (https://solana.com/docs/rpc/http/getslot), repeated requests to the same RPC node will always return a value greater than or equal to the previous one, since slot numbers only increase. Sending these requests to different RPC nodes, however, can return a slot number lower than the previous one, because some nodes may lag slightly behind others in processing the latest slots.
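A toy simulation of the getSlot scenario above. The slot numbers and the one-slot-per-call advance are made up for illustration; the point is only that alternating between a node that is ahead and a node that lags can make the observed slot go backwards, while polling a single node never does.

```python
def is_monotonic(slots: list[int]) -> bool:
    """True if every observed slot is >= the one observed before it."""
    return all(later >= earlier for earlier, later in zip(slots, slots[1:]))


# HYPOTHETICAL snapshot: node-b is 3 slots behind node-a when polling starts.
START_SLOT = {"node-a": 1_003, "node-b": 1_000}


def observed_slots(route: list[str]) -> list[int]:
    """Simulate getSlot replies when every node advances one slot between calls."""
    return [START_SLOT[node] + i for i, node in enumerate(route)]


load_balanced = observed_slots(["node-a", "node-b", "node-a", "node-b"])
pinned = observed_slots(["node-b", "node-b", "node-b", "node-b"])
```

Here `load_balanced` is `[1003, 1001, 1005, 1003]` and fails the monotonic check, while `pinned` is `[1000, 1001, 1002, 1003]` and passes, even though it is served by the lagging node.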

Our RPC API lets you assign node affinity with the X-Syndica-Affinity request header, giving you precise control over request routing. With affinity set, sequential requests are processed by the same node, providing consistency and control. For more details, see our documentation here.
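One way a gateway could honor an affinity header is sketched below: requests without the header are round-robined, and requests with it are hashed to a fixed node. Treating the X-Syndica-Affinity value as an opaque key and using rendezvous hashing are both assumptions for illustration. This post does not describe the header's accepted values (its heading mentions single-node, multi-node, and dynamic strategies) or how Edge Gateway implements them, so consult the Syndica documentation for the real semantics.

```python
import hashlib
import itertools


def rendezvous_pick(key: str, nodes: list[str]) -> str:
    """Rendezvous (highest-random-weight) hashing: the same key always maps to the
    same node, and removing some other node does not move it."""
    return max(nodes, key=lambda node: hashlib.sha256(f"{key}|{node}".encode()).digest())


class AffinityRouter:
    """Round-robin by default; pin requests that carry an affinity key (hypothetical)."""

    HEADER = "x-syndica-affinity"  # HTTP header names are case-insensitive

    def __init__(self, nodes: list[str]) -> None:
        self.nodes = list(nodes)
        self._round_robin = itertools.cycle(self.nodes)

    def route(self, headers: dict[str, str]) -> str:
        lowered = {name.lower(): value for name, value in headers.items()}
        key = lowered.get(self.HEADER)
        if key is None:
            return next(self._round_robin)  # default: spread load across nodes
        # ASSUMPTION: the header value is treated as an opaque affinity key.
        return rendezvous_pick(key, self.nodes)
```

Rendezvous hashing is our choice here because a plain `hash(key) % len(nodes)` would reshuffle most pinned clients whenever a node is added or removed.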

Upgraded Compression Support

We enhanced our response compression by adding support for br (Brotli) alongside existing gzip support. Clients choose an encoding through the standard Accept-Encoding header in their HTTP requests.
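With Accept-Encoding, the client lists the codings it accepts, each optionally weighted by a q-value, and the server picks one. The sketch below shows one way a server could choose between br and gzip. Preferring br when the client weights both equally is our assumption, not documented Syndica behavior, and the simplified parser ignores edge cases such as an explicit `identity;q=0`.

```python
# Encodings this hypothetical server can produce, in order of server preference.
SUPPORTED = ["br", "gzip"]


def parse_accept_encoding(header: str) -> dict[str, float]:
    """Map each coding in an Accept-Encoding value to its q-value (default 1.0)."""
    weights: dict[str, float] = {}
    for item in header.split(","):
        coding, _, params = item.partition(";")
        coding = coding.strip().lower()
        if not coding:
            continue
        q = 1.0
        for param in params.split(";"):
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value.strip())
                except ValueError:
                    q = 0.0  # malformed weight: treat the coding as not acceptable
        weights[coding] = q
    return weights


def choose_encoding(header: str) -> str:
    """Pick the client's highest-weighted supported coding; ties go to server order."""
    weights = parse_accept_encoding(header)
    wildcard = weights.get("*", 0.0)
    best, best_q = "identity", 0.0
    for coding in SUPPORTED:
        q = weights.get(coding, wildcard)
        if q > best_q:
            best, best_q = coding, q
    return best
```

A client that sends `Accept-Encoding: gzip, deflate, br` gets `br`; one that sends only `gzip` keeps getting gzip, and one that sends nothing gets an uncompressed (`identity`) response.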

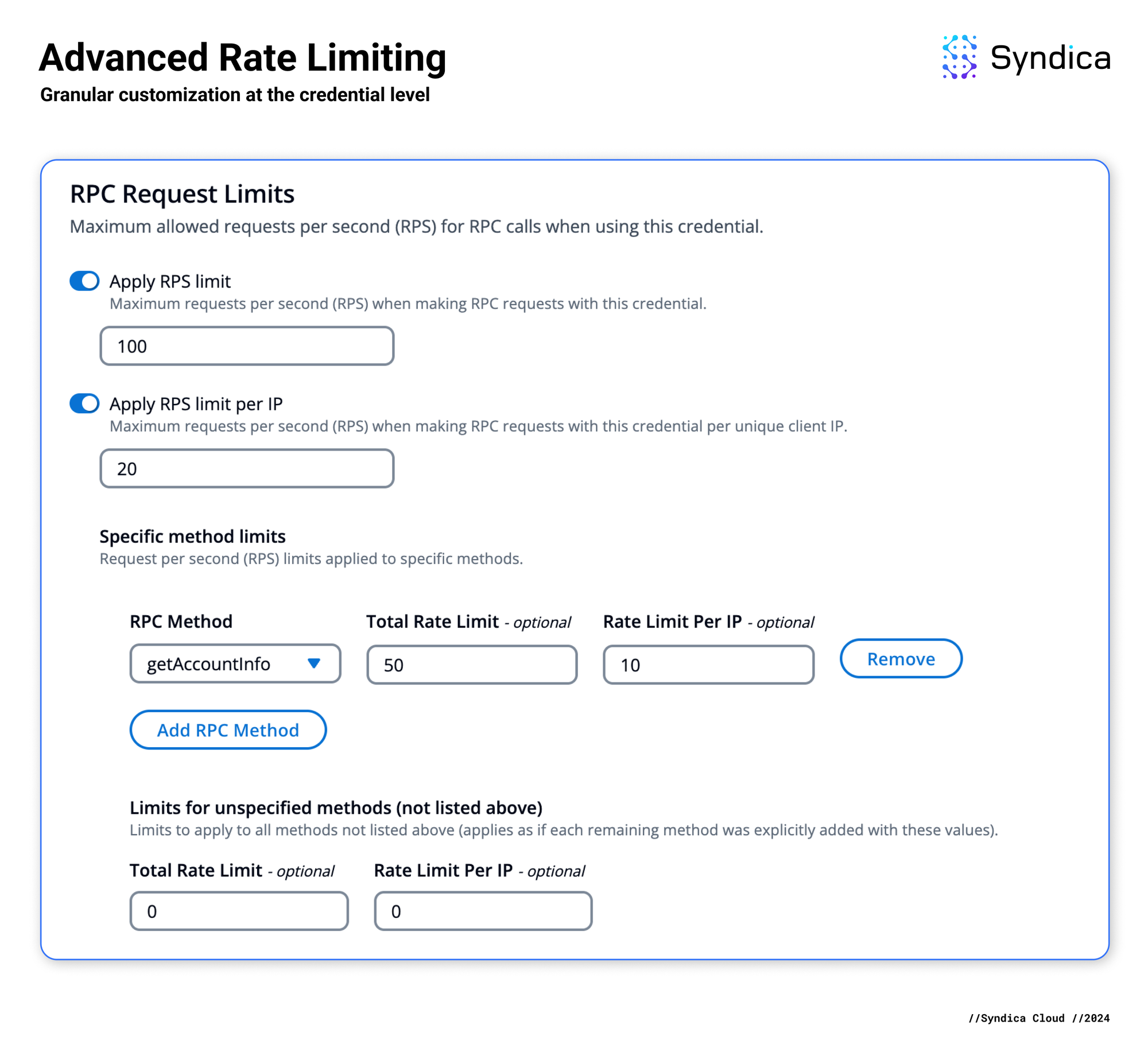

Overhauled Rate Limiting

We completely revamped our rate-limiting system to enhance performance and enable more granular customization at the credential level. You can now configure API Keys with separate limits for RPC calls, WS connections, and subscriptions.

You also have the option to set separate limits for different RPC methods and apply limits at the individual IP level. For detailed information, please refer to our documentation at https://docs.syndica.io/platform/concepts/api-keys.
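This post doesn't say which algorithm the new rate limiter uses. The sketch below uses one token bucket per scope, a common choice, to show how a per-key RPC limit, per-method limits, and a per-IP limit can be checked together so that a request is admitted only if every applicable limit has room. The class names, the parameter layout, and the burst size of one second's worth of requests are illustrative assumptions, not Syndica's configuration format. Limits on concurrent WebSocket connections and subscriptions would be counters rather than rates and are left out.

```python
class TokenBucket:
    """Token bucket refilled at `rate` tokens per second, holding at most `capacity`."""

    def __init__(self, rate: float, capacity: float, now: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full
        self.updated = now

    def refill(self, now: float) -> None:
        # Add tokens in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now


class RateLimiter:
    """Hypothetical per-API-key limits: all RPC calls, selected methods, and per client IP."""

    def __init__(self, rpc_rps: float, method_rps: dict[str, float], ip_rps: float) -> None:
        self.rpc_rps = rpc_rps
        self.method_rps = method_rps
        self.ip_rps = ip_rps
        self.buckets: dict[tuple[str, ...], TokenBucket] = {}

    def _bucket(self, key: tuple[str, ...], rps: float, now: float) -> TokenBucket:
        if key not in self.buckets:
            self.buckets[key] = TokenBucket(rps, rps, now)  # burst = 1 s of traffic
        bucket = self.buckets[key]
        bucket.refill(now)
        return bucket

    def allow(self, api_key: str, method: str, ip: str, now: float) -> bool:
        buckets = [
            self._bucket(("rpc", api_key), self.rpc_rps, now),
            self._bucket(("ip", api_key, ip), self.ip_rps, now),
        ]
        if method in self.method_rps:
            buckets.append(self._bucket(("method", api_key, method), self.method_rps[method], now))
        # All-or-nothing: spend tokens only if every applicable limit has room.
        if all(b.tokens >= 1 for b in buckets):
            for b in buckets:
                b.tokens -= 1
            return True
        return False
```

For example, with `RateLimiter(rpc_rps=100, method_rps={"getProgramAccounts": 2}, ip_rps=50)`, a third getProgramAccounts call in the same instant is rejected, while getSlot calls on the same key still pass.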

Stay tuned for an in-depth blog post on this impactful new feature and its significance for decentralized applications (dApps).

Internal Tests, Metrics, and New Developments

Internally, we developed extensive metrics and rigorous testing protocols to ensure API stability and to keep improving performance through iterative updates. Our ongoing infrastructure improvements are designed to reduce latencies and significantly improve overall network accessibility.

We developed a dedicated service called Gateway Tester for continuous integration testing. This tool constantly runs in our clusters, making requests and verifying expected behavior. It prevents API regressions and serves as an additional monitor for our services.
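This post doesn't describe Gateway Tester's internals. The sketch below only illustrates the kind of check a continuously running tester might apply to each response: validating the JSON-RPC 2.0 envelope (a `jsonrpc` of `"2.0"`, a matching `id`, and exactly one of `result` or `error`) and then the shape of a getSlot result. The function names are hypothetical.

```python
import json


def check_jsonrpc_response(raw: str, request_id: int) -> list[str]:
    """Return problems found in a JSON-RPC 2.0 response; an empty list means it passed."""
    try:
        body = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc}"]
    if not isinstance(body, dict):
        return ["response is not a JSON object"]
    problems = []
    if body.get("jsonrpc") != "2.0":
        problems.append("missing or wrong 'jsonrpc' version")
    if body.get("id") != request_id:
        problems.append(f"id mismatch: expected {request_id}, got {body.get('id')!r}")
    if ("result" in body) == ("error" in body):
        problems.append("exactly one of 'result' or 'error' must be present")
    return problems


def check_get_slot(raw: str, request_id: int) -> list[str]:
    """Generic JSON-RPC checks plus a getSlot-specific check on the result."""
    problems = check_jsonrpc_response(raw, request_id)
    if not problems:
        result = json.loads(raw).get("result")
        # bool is a subclass of int in Python, so exclude it explicitly.
        if not isinstance(result, int) or isinstance(result, bool) or result < 0:
            problems.append(f"expected a non-negative integer slot, got {result!r}")
    return problems
```

A tester loop built on this would send a request, run the checks, and raise a metric or alert whenever the returned list is non-empty.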

We unified our metric aggregation and monitoring to cover every layer of our infrastructure: cloud services, hardware nodes, Kubernetes, containers, and all code/web services.

We developed a comprehensive suite of alerts for staging and production environments that leverage the full range of new metrics from our internal services and API requests.

We expanded our infrastructure to ensure minimal to no service degradation across the platform (website, dashboards, APIs, etc.) during updates. While we have always prioritized this, the transition to Edge Gateway has enhanced our ability to maintain seamless service.

Altogether, this solid foundation ensures we remain the leader in RPC infrastructure.

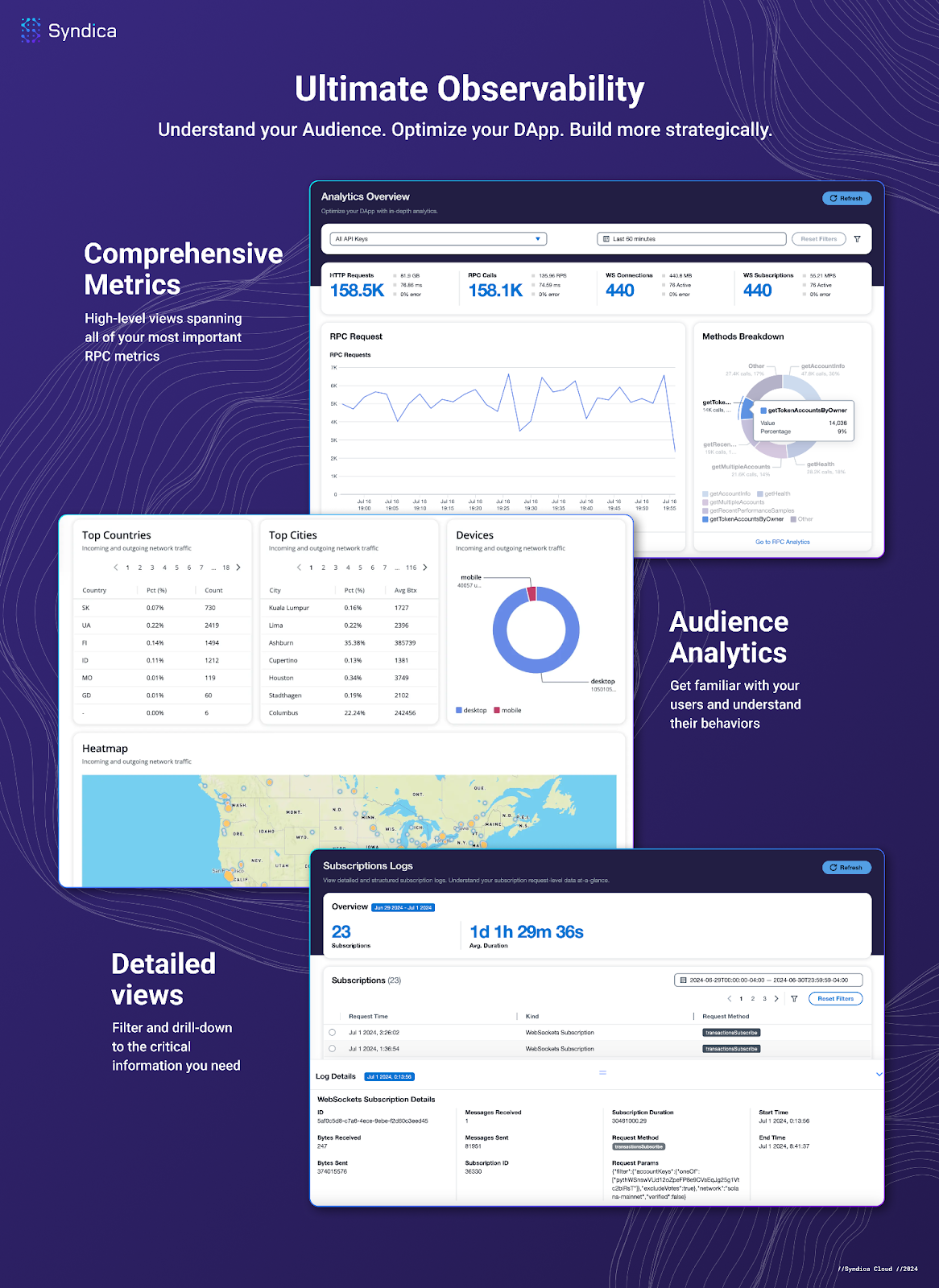

Enhanced Metrics & Insights

Metrics play a crucial role in gaining real-time insights, whether you're analyzing data usage or investigating latencies for specific methods. We reimagined our metrics system to enhance usability and performance, ensuring streamlined access to essential information.

Query Performance

We completely redesigned our metrics system to support real-time dashboards and rapid aggregations, capable of handling billions of requests per month with lightning-fast loading times. Now, users can easily view breakdowns by method, errors, latencies, and more without experiencing page load delays.

API Namespace Organization

Logs and metrics can now be filtered by namespace (solana-mainnet, chainstream, etc.) and are architected to support all Syndica APIs going forward. We aim to eliminate unnecessary page navigation and unfamiliar UIs by providing a unified information hub. For instance, whether you are using our RPC infrastructure or accessing ChainStream data, reviewing metrics will feel familiar and intuitive.
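As a rough illustration of the breakdowns described above, the sketch below groups request logs by namespace and method, computes request counts, error counts, and nearest-rank p50/p99 latency, and optionally filters to one namespace. The `RequestLog` schema is hypothetical. At billions of requests per month, a production system would more likely pre-aggregate into time buckets or use quantile sketches than sort raw logs on every page load.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass
class RequestLog:
    namespace: str      # e.g. "solana-mainnet" or "chainstream"
    method: str
    latency_ms: float
    error: bool


def nearest_rank(sorted_values: list[float], pct: float) -> float:
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def breakdown(logs: list[RequestLog], namespace: Optional[str] = None) -> dict:
    """Per-(namespace, method) request count, error count, and p50/p99 latency."""
    groups: dict[tuple[str, str], list[RequestLog]] = defaultdict(list)
    for log in logs:
        if namespace is None or log.namespace == namespace:
            groups[(log.namespace, log.method)].append(log)
    summary = {}
    for key, entries in groups.items():
        latencies = sorted(e.latency_ms for e in entries)
        summary[key] = {
            "requests": len(entries),
            "errors": sum(e.error for e in entries),
            "p50_ms": nearest_rank(latencies, 50),
            "p99_ms": nearest_rank(latencies, 99),
        }
    return summary
```

Passing `namespace="solana-mainnet"` narrows the same breakdown to RPC traffic, which mirrors the namespace filter described above.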

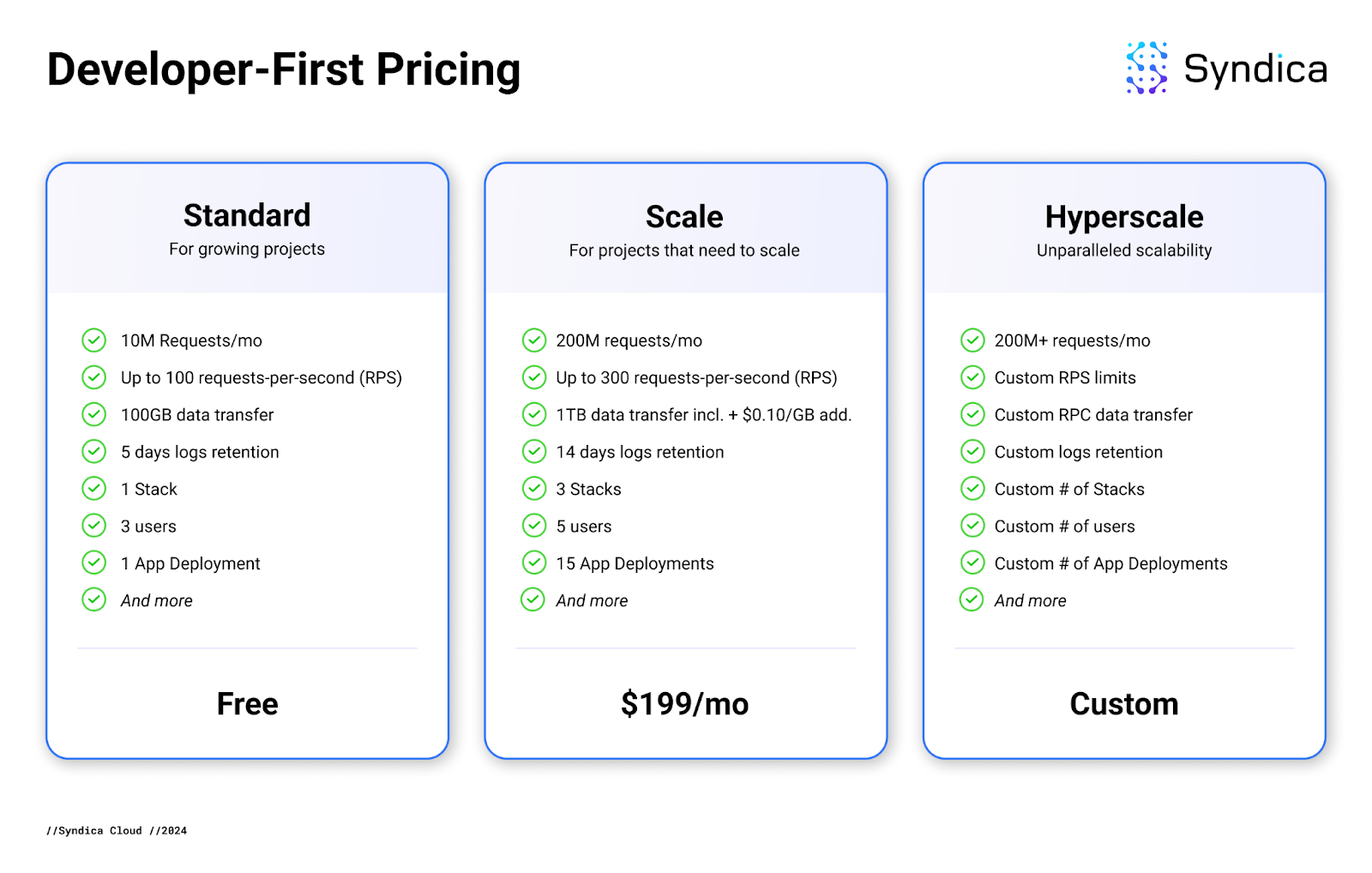

Developer-First Pricing

We've reworked our pricing to ensure we have the best-in-class free tier, which includes up to 10 million free RPC calls per month.

In addition to reimplementing our core infrastructure, we have optimized our pricing to better serve both individual developers and premium enterprises.

Get started today for free on Standard Mode. You’ll get 10M free RPC calls per month at up to 100 requests-per-second (RPS) and one free app deployment. No access fee is required.

When your app is ready to scale, upgrade to Scale Mode. For $199 per month, you’ll get 200M RPC calls per month, 300 RPS, and 1TB of data transfer. Sign up today and enable Scale Mode to get 3 months free, worth $600 in credits.
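To see how the monthly quotas relate to the RPS caps, here is the arithmetic, assuming a 30-day month and one quota unit per RPC call (both assumptions for illustration). The RPS figure caps bursts, while the monthly quota caps sustained throughput:

$$
\begin{aligned}
\text{seconds per month} &= 30 \times 86{,}400 = 2{,}592{,}000 \\
\text{Standard: } \frac{10{,}000{,}000}{2{,}592{,}000} &\approx 3.9\ \text{RPS average}, &\quad \frac{10{,}000{,}000}{100\ \text{RPS}} &= 100{,}000\ \text{s} \approx 27.8\ \text{h} \\
\text{Scale: } \frac{200{,}000{,}000}{2{,}592{,}000} &\approx 77\ \text{RPS average}, &\quad \frac{200{,}000{,}000}{300\ \text{RPS}} &\approx 666{,}667\ \text{s} \approx 7.7\ \text{days}
\end{aligned}
$$

In other words, an app running flat out at the Standard cap would use its monthly allowance in just over a day, so for steady traffic the monthly quota, not the RPS limit, is usually the binding constraint.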

Large enterprises seeking premium white-glove service in Solana infrastructure should inquire about our Hyperscale Mode. We will set you up with custom limits for higher RPC volume and RPS, dedicated support, unparalleled reliability, and a user-focused orientation. On Hyperscale, you can focus entirely on growing your business.

We've unified every aspect of the web3 development stack into one seamless platform. Now, you can forget about juggling multiple RPC providers, hosting services, or other Solana-specific needs; we'll handle everything for you.

Get started quickly today for free, and check out our documentation here.